The analog vs digital audio debate is very polarizing. I have noticed that most people defend one side or the other, allowing little room for nuance.

Which is better – analog or digital audio? Well, it depends on who you ask…

If you ask me, I’d say that both analog and digital have benefits and drawbacks. By the end of this post, you’ll understand the most important differences so that you can decide for yourself.

What is the main difference between analog and digital audio?

The difference between analog and digital audio is found in the way audio information is stored. Sound waves are a series of vibrations through a medium. Analog audio recording technology stores this information by creating a series of magnetic charges along a reel of magnetic tape. Digital audio technology stores audio information as a series of numeric values on a hard drive.

In this post, you will learn the pros and cons of each recording method along with the difference between analog and digital audio technologies for live sound applications.

The information in this post was written to be as easy-to-understand as possible. Some concepts in this article will make more sense if you have a basic understanding of how sound works. If you find any of the following sections confusing, feel free to reference this post I wrote on Audio Basics.

Before I begin discussing the differences between digital and analog audio systems, I think it’s important to mention that all digital audio systems include some analog audio technology.

Microphones are analog audio devices that transduce acoustical energy into an analog electrical signal. Preamplifiers, power amplifiers, and loudspeakers are all analog devices, as well.

The primary focus of this section will be to highlight the key differences in analog and digital recording technology.

Analog Audio Explained

Let’s start by taking a look at the most common analog audio formats – tape and vinyl. It will help if we have a basic understanding of how these formats work.

Tape

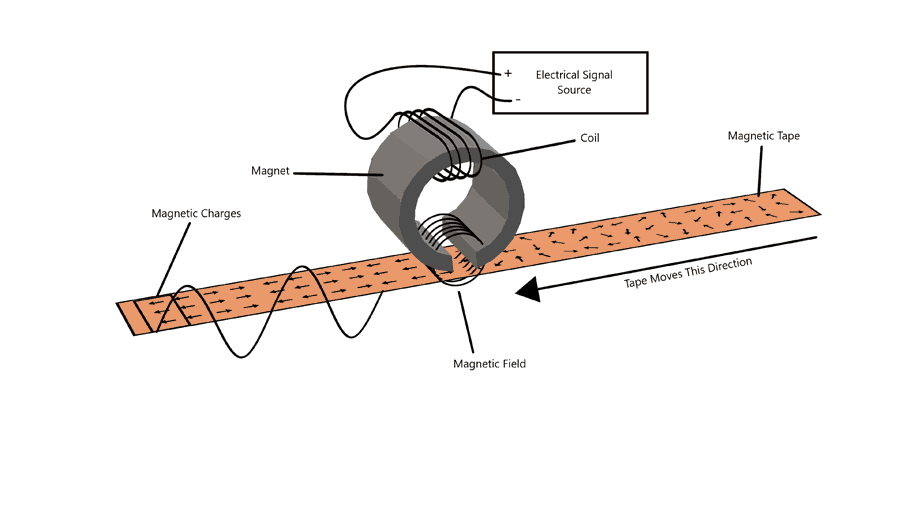

Magnetic tape is the highest-quality method for analog audio recording and playback. Tape machines operate on the following principle: when an electric current is sent through a wire, a magnetic field forms around the wire, and vice versa.

A tape machine allows us to take the waves of alternating electrical current from a microphone and store them as charges along magnetic tape. When you play that magnetized tape back through the tape machine, it is converted back into electrical currents that can be played through a speaker.

To record audio, a tape machine sends electrical audio signals through a coiled wire surrounding a magnet which is held in close proximity to magnetic tape. This coil of wire surrounding the magnet is called the record head.

As the tape passes through the magnetic field created by the record head, the particles along the tape are magnetically charged. The pattern of the magnetic charges along the tape resembles the audio signal sent through the coil of wire.

The amplitude of the audio signal correlates with the magnitude of the magnetic charges created on the tape.

To play back the audio, the process is reversed. The magnetized tape induces an electric current in the play head, which connects to an amplifier to be played through speakers.

There are various types of tape and tape machines which affect the quality of the audio recorded. The two main variables are tape speed and tape width.

Tape Speed

The rate at which the tape passes the record head affects the quality of the recording. A faster tape speed produces a recording with greater frequency response, less hiss, and shorter dropouts.

Tape machine speed is measured in inches per second (ips). Common tape machine speeds are 7½ ips, 15 ips, and 30 ips. The standard for professional recording is 15 ips.

Tape Width

The width of the tape also affects the quality of the recording. Wider tape allows for a higher-quality recording.

However, tape width can be utilized to record more tracks rather than improving the audio quality of a single track. This allows several sources to be recorded and played back independently.

Vinyl

Vinyl records are the standard consumer medium for analog audio recordings. In an effort to distribute audio on a large scale, recordings can be copied from analog tape onto vinyl records.

Although the sound quality of vinyl isn’t as good as the original tape, vinyl is easier to mass produce, it takes up less space, and it’s more durable.

Compared to tape, vinyl records are less vulnerable to the elements. Whereas tape can be destroyed by magnetic exposure, vinyl records are immune to magnetic fields because they use a different means of audio storage.

Rather than magnetic charge, the textured grooves on the surface of vinyl records store the audio information.

As a vinyl record spins at a specific rate, a stylus travels through the grooves on its surface. As the stylus moves back and forth with the grooves, it creates an electric current in a wire which connects to an amplifier to be played through speakers. The amplitude of the audio signal is correlated with the intensity in the movement of the stylus.

You can see an animation of how a vinyl record works by Animagraffs. Animagraffs is a website that creates amazing animations of various technologies.

Vinyl records are used only for playback in the modern world. Analog recordings are made with magnetic tape. The tapes are used to cut a master disc, from which stampers are made for pressing the information to vinyl discs.

Digital Audio Explained

Of course, most modern music is recorded using digital technology. Let’s look at the basic principles of digital audio so that we can better understand how it compares to analog.

PCM (Pulse Code Modulation)

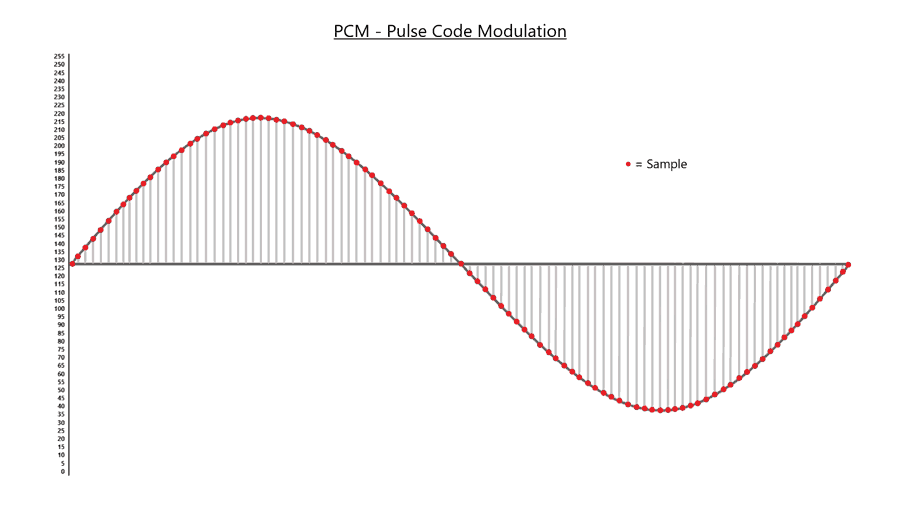

PCM, or Pulse Code Modulation, is the standard method for encoding audio signals into binary information.

In analog audio recording, a model of the sound waves is created using magnetic charge. However, PCM creates a model of the sound waves by storing a sequence of numerical values that represent the amplitude at various points along a wave.

These values are represented by groups of binary bits, called samples. Each sample represents a numerical value within a predetermined range of possible values. This process is called quantization, and is performed by an analog-to-digital converter (A-to-D converter).
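If it helps to see the idea in code, here is a minimal Python sketch of sampling and quantization. The function names (`quantize`, `sample_sine`) and the 1 kHz test tone are my own choices for illustration, and a real A-to-D converter does this in hardware with filtering and dithering that I'm leaving out.

```python
import math

def quantize(value, bit_depth):
    """Map an amplitude in [-1.0, 1.0] to a signed integer code."""
    max_code = 2 ** (bit_depth - 1) - 1  # e.g. 32767 for 16-bit
    clamped = max(-1.0, min(1.0, value))  # keep the input inside the legal range
    return round(clamped * max_code)

def sample_sine(frequency_hz, sample_rate_hz, bit_depth, num_samples):
    """Take evenly spaced snapshots of a sine wave and quantize each one."""
    return [
        quantize(math.sin(2 * math.pi * frequency_hz * n / sample_rate_hz), bit_depth)
        for n in range(num_samples)
    ]

# One full cycle of a 1 kHz tone at 48 kHz is 48 samples.
samples = sample_sine(1000, 48000, 16, 48)
print(samples[:13])  # rises from zero to the positive peak
```

Each number in the list is one sample: a single amplitude value chosen from the range the bit depth allows.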

During playback of a digital recording, the samples are converted back to electrical signals and sent to speakers. This process is performed by a digital to analog converter (D-to-A converter or DAC).

Here is a simplified illustration of how audio waves are stored using digital samples:

Bit Depth

Each sample represents a value within a range of possible values. The range of possible values is determined by the bit depth. Bit depth is the term that describes how many bits are included in each sample.

Each bit can represent two possible values. Recordings which utilize more bits per sample can represent a larger range of values and have a much lower noise floor than recordings with a lower bit depth.

Each time a bit is added, the number of possible values is doubled. Whereas one bit can represent two values, two bits can represent four values, three bits can represent eight values, and so on.

| Bit Depth | Possible Values |
|---|---|
| 1-bit | 2 |
| 2-bit | 4 |
| 4-bit | 16 |
| 8-bit | 256 |
| 16-bit (CD Standard) | 65,536 |
| 24-bit (Professional Standard) | 16,777,216 |

The standard bit depth for CDs is 16-bit, allowing for 65,536 possible amplitude values. The professional standard is a bit depth of 24-bit, which allows 16,777,216 possible amplitude values! Most professional studios record and mix using 32-bit floating point.
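The doubling rule is easy to check for yourself. This short Python sketch (the function name `possible_values` is just my label) computes the number of values for each bit depth discussed above.

```python
def possible_values(bit_depth):
    """Each added bit doubles the count, so n bits give 2 to the power n values."""
    return 2 ** bit_depth

for bits in (1, 2, 4, 8, 16, 24):
    print(f"{bits}-bit: {possible_values(bits):,} possible values")
```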

Sample Rate

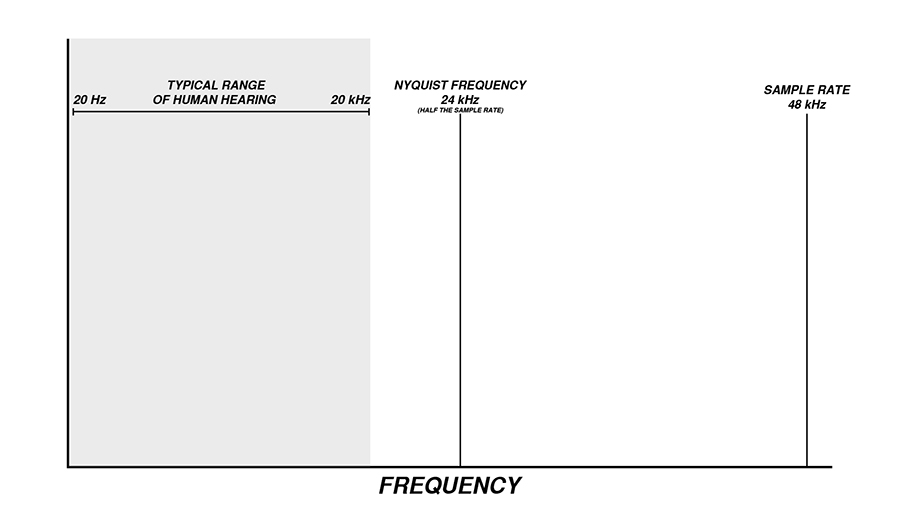

The sample rate determines how many samples are taken of a sound wave per second. Sample rate is measured in Hertz (Hz). Recording at a higher sample rate allows higher frequencies to be recorded.

The Nyquist Theorem states that digital sampling can only faithfully represent frequencies less than half of the sampling rate. This means that if you want to capture 20kHz, the highest frequency audible to humans, you must use a sample rate greater than 40kHz.

For this reason, 44.1kHz is the standard sample rate for CDs. Professional audio for video utilizes a standard of 48kHz. Many recordings greatly exceed these standards, with sample rates of 96kHz and beyond!

While the benefit of higher sample rates is often understood to be an extension of recorded frequency range, this isn’t the main benefit.

I won’t get too deep into it in this post, but it has more to do with the type of anti-aliasing filter that can be used to filter out higher frequencies with fewer artifacts. The resulting audible bandwidth of a 44.1 kHz recording and a 96 kHz recording is ultimately the same.

Digital Audio Data Compression Formats

The audio files produced by recording studios are very large, due to the amount of information they contain. If a 3-minute song is recorded with a bit depth of 24-bit and a sample rate of 96kHz, the file size will be approximately 52MB per channel, or about 104MB for a stereo file.
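Here is the arithmetic behind that kind of estimate as a small Python sketch. It counts only the raw PCM data (no file header), and the function name `pcm_size_bytes` is my own.

```python
def pcm_size_bytes(duration_s, sample_rate_hz, bit_depth, channels):
    """Raw PCM size: seconds x samples per second x bytes per sample x channels."""
    bytes_per_sample = bit_depth // 8
    return duration_s * sample_rate_hz * bytes_per_sample * channels

mono = pcm_size_bytes(180, 96000, 24, 1)
stereo = pcm_size_bytes(180, 96000, 24, 2)
print(f"mono:   {mono / 1e6:.2f} MB")
print(f"stereo: {stereo / 1e6:.2f} MB")
```

A single channel comes to just under 52MB, and a stereo file is twice that.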

In the early days of the internet and portable music players like the iPod, the full uncompressed PCM data was too large to be streamed over the internet or stored on a small hard drive.

In an attempt to make the file sizes smaller, we used data compression. This is not to be confused with dynamic range compression – in fact, you can learn all about data compression in another Audio University post on Lossy vs Lossless Audio.

Data compression is a method of reducing the size of a file. There are two main categories of data compression formats, lossy and lossless.

Lossy Data Compression Formats (MP3 & AAC)

Unfortunately, the most widely used data compression formats in consumer audio are lossy. This means that, although special algorithms are used to reduce negative effects, data is lost during the process of compressing the file.

If information is lost through the process of compressing data, the compression format being used is lossy. Once data is lost, it can never be restored.

The most common lossy audio data compression formats are MP3, AAC, and Ogg Vorbis. These formats are used for storing many files with limited hard drive space or streaming content over limited-bandwidth internet connections.

The algorithms behind these formats aim to prioritize content based on models of human perception of sound and discard the low-priority content.

Lossless Data Compression Formats (FLAC & ALAC)

If no information is lost through the process of compressing data, the compression format being used is lossless.

Some streaming services, such as Tidal, utilize lossless compression. Using these formats, information can be encoded into a smaller file and later decoded, ultimately restoring the original PCM information as a WAV file.

Although these formats do save some space compared to uncompressed files, they are nowhere close to the efficiency of lossy formats.
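To make the lossy vs lossless distinction concrete, here is a rough Python sketch. Please note the assumptions: I'm using `zlib` (a general-purpose compressor from the standard library) as a stand-in for a lossless audio codec, and simply throwing away the low 8 bits of each sample as a stand-in for lossy encoding. Neither is how FLAC, MP3, or AAC actually work. The point is only that one process can be reversed exactly and the other can't.

```python
import math
import struct
import zlib

# One second of a 440 Hz tone as 16-bit PCM at 44.1 kHz.
samples = [round(32767 * math.sin(2 * math.pi * 440 * n / 44100)) for n in range(44100)]
pcm_bytes = struct.pack(f"<{len(samples)}h", *samples)

# Lossless stand-in: compress, then decompress, and compare byte for byte.
compressed = zlib.compress(pcm_bytes, 9)
restored = zlib.decompress(compressed)
print("lossless round trip exact:", restored == pcm_bytes)
print("compressed is smaller:", len(compressed) < len(pcm_bytes))

# Lossy stand-in: discard the low 8 bits of every sample. The detail is gone for good.
lossy = [(s >> 8) << 8 for s in samples]
print("lossy output matches original:", lossy == samples)
```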

Currently, we are starting to see streaming platforms offer lossless audio streaming, which means there is no quality loss compared to the original uncompressed version. However, over the last few decades most platforms have only offered lossy audio, which can drastically reduce sound quality.

Unfortunately, this has given digital audio a bad reputation. But stay with me, and you’ll see that digital audio isn’t as bad as you might believe!

Analog vs Digital Audio: Pros & Cons

As you can see, analog and digital audio recording technologies share a common goal – to create a model of acoustic waveforms that can be played back as accurately as possible. Each technology accomplishes this goal quite well.

The audio quality achieved using one method is not necessarily better than the other, just different. The unique qualities of each method will be explored in this section.

There are some details that we need to consider when deciding which format is better for the task at hand.

Noise

One of the biggest differences between analog and digital audio is the noise level. If you’re an audio enthusiast, you’re probably familiar with the concept of a noise floor and the signal-to-noise ratio.

Beneath the audio signal, whether it be music, sound effects, or spoken word, there is noise. That noise can come from the recording environment, problems with the system, nearby electronics, or a number of other sources.

Even if you eliminate all noise from the room, you’ll still be left with the inherent noise from your recording medium.

Ideally, we want the signal-to-noise ratio to be as large as possible. This is where analog audio really falls short of digital audio.

The inherent noise of both analog tape and vinyl is significantly greater than the inherent noise of a typical digital audio system. I’m sure you’ve heard the sound of tape hiss on an old recording or the clicks and pops of an old vinyl record.

Don’t get me wrong – this can add a nostalgic element to the listening experience. But let’s be honest, wouldn’t it be better if there was no noise at all?

Digital audio has a noise floor, but it is extremely low. The theoretical noise floor of a 24-bit digital recording is -144 dBFS, which is practically non-existent.
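That figure comes from a common rule of thumb: each bit adds roughly 6 dB of range. Here is a quick Python sketch of the calculation. The function name is mine, and this is the simplified version that compares one quantization step to full scale; real converters never quite reach these numbers.

```python
import math

def theoretical_noise_floor_dbfs(bit_depth):
    """Level of a single quantization step relative to full scale, in dB."""
    return 20 * math.log10(1 / 2 ** bit_depth)

for bits in (16, 24):
    print(f"{bits}-bit: {theoretical_noise_floor_dbfs(bits):.1f} dBFS")
```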

When it comes to noise, there is no question – digital wins.

Fidelity

Secondly, let’s compare the signal fidelity between analog and digital audio.

We first need to define “fidelity”. According to Oxford Languages, fidelity can be defined as “the degree of exactness with which something is copied or reproduced”.

So the question here is “Which format can capture, store, and reproduce the most accurate representation of the original input signal?”.

I’ve heard many justifications as to why analog audio is better than digital audio. Over time, I’ve learned that most of these justifications are grounded in a misunderstanding of how digital audio works.

This is where I might upset some people, but remember that I’m just laying out the facts to the best of my ability…

One of the most common misconceptions has to do with the resolution of a digital audio waveform. In fact, I used to believe this one myself.

The misconception is that an analog-to-digital converter isn’t capable of capturing a perfectly accurate waveform. The logic behind this belief seems valid at first glance…

“If a digital audio system only takes a finite number of snapshots (or samples) every second and each sample can represent only a finite number of values, how could it possibly create an infinitely smooth waveform like the original sound wave?”

To understand the answer, we can look to the Nyquist Theorem. I already mentioned the Nyquist Theorem above, but let’s take another look.

The Nyquist Theorem states that a waveform can be captured and reproduced with no loss as long as it is sampled more than twice per cycle of the highest frequency it contains. Therefore, the sample rate of an audio file determines the highest frequency that can be sampled with no loss.

In theory, an audio file with a 48kHz sample rate is capable of perfectly recording and reproducing frequencies up to (just below) 24kHz – half of the sample rate.

Given that the range of human hearing and low pass filters within microphones and other audio equipment generally top out around 20kHz, we can safely say that a 48kHz sample rate provides adequate frequency range.

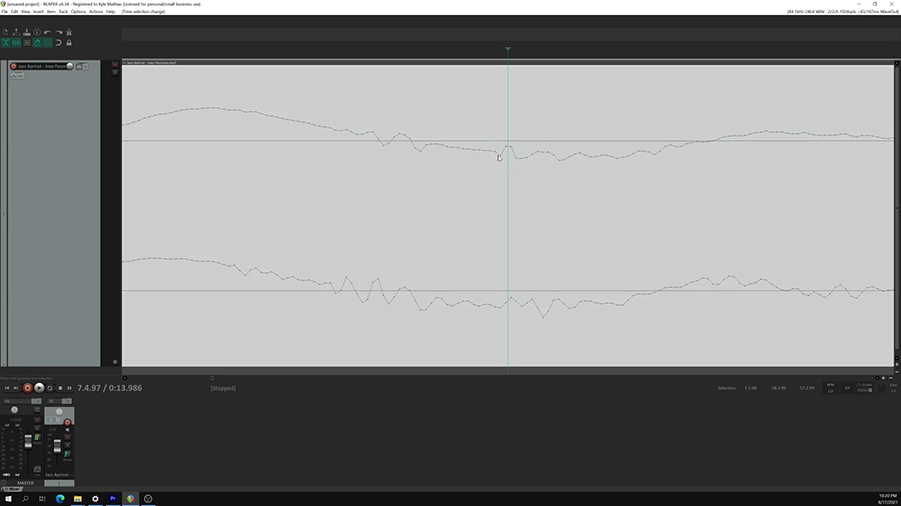

Taking a close look at the samples within the DAW, we can see that they are represented as a series of dots connected by straight lines. This representation can be misleading…

While it’s true that the samples are just a series of dots that represent the position of the waveform at each passing moment in time, the resulting sound wave won’t look jagged like these.

The truth is that the waveforms played back through the digital-to-analog converter will be smooth and, in theory, identical to the original waveforms recorded by the analog-to-digital converter.

So long as the highest frequency doesn’t surpass the Nyquist Frequency, there is only one pathway the waveform can take. Now, I’ll be honest here… I still have trouble fully wrapping my head around this part.
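One thing that helped me was trying it numerically. The Python sketch below samples a 1 kHz sine wave at 48 kHz, then rebuilds the value at a point halfway between two samples using sinc interpolation (the Whittaker-Shannon formula, which is the textbook way to describe ideal reconstruction). The constants and function names are my own choices, and because I only use a finite block of samples, the match is extremely close rather than mathematically perfect.

```python
import math

SAMPLE_RATE = 48000
FREQ = 1000
NUM_SAMPLES = 4800  # 0.1 seconds of audio

def signal(t):
    """The 'original' continuous waveform."""
    return math.sin(2 * math.pi * FREQ * t)

# The only thing the digital system stores: one value per sample period.
samples = [signal(n / SAMPLE_RATE) for n in range(NUM_SAMPLES)]

def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)

def reconstruct(t):
    """Rebuild the waveform at any time t as a sum of one sinc per sample."""
    return sum(s * sinc(t * SAMPLE_RATE - n) for n, s in enumerate(samples))

# Pick a moment halfway between sample 2400 and sample 2401.
t_between = 2400.5 / SAMPLE_RATE
error = abs(reconstruct(t_between) - signal(t_between))
print(f"original:      {signal(t_between):.6f}")
print(f"reconstructed: {reconstruct(t_between):.6f}")
print(f"difference:    {error:.2e}")
```

The reconstructed value lands almost exactly on the original curve, even though no sample was ever taken at that moment.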

That’s why I want to recommend a video by Monty Montgomery on xiph.org.

It’s free to watch and it’s widely regarded as one of the best videos for debunking the myth that analog audio is somehow “smoother” or “higher resolution” than digital audio.

I find myself rewatching this video every year and each time I catch on to things that didn’t make sense before. So, don’t feel bad if you don’t fully understand everything your first time watching.

Aliasing

There is another drawback to digital audio that is important to mention, called aliasing. It also has to do with the Nyquist Theorem.

If the analog-to-digital converter attempts to sample a frequency that exceeds the Nyquist frequency, it will result in aliasing.

On a basic level, aliasing describes a situation where the A-to-D converter confuses a high frequency for a much lower frequency due to sample rate restrictions.
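Here is a small Python sketch of that confusion. The helper `alias_frequency` is my own, and it assumes a single pure tone with no anti-aliasing filter in front of the converter. The second half shows why the converter can't tell the difference: a 30 kHz tone sampled at 48 kHz produces exactly the same sample values as an inverted 18 kHz tone.

```python
import math

def alias_frequency(frequency_hz, sample_rate_hz):
    """Frequency a sampled tone appears at, folded into the 0..Nyquist range."""
    folded = frequency_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)

print(alias_frequency(10000, 48000))  # below Nyquist, unchanged
print(alias_frequency(30000, 48000))  # above Nyquist, folds down

# The samples themselves are indistinguishable (apart from polarity).
tone_30k = [math.sin(2 * math.pi * 30000 * n / 48000) for n in range(16)]
tone_18k = [-math.sin(2 * math.pi * 18000 * n / 48000) for n in range(16)]
same = all(abs(a - b) < 1e-9 for a, b in zip(tone_30k, tone_18k))
print("30 kHz and 18 kHz samples match:", same)
```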

In an effort to mitigate this problem, engineers will apply low pass filters to the signal chain which prevent frequencies that exceed the Nyquist frequency from being sampled by the system.

For this reason, we don’t use a sample rate of 40kHz to capture a 20kHz signal, but instead use sample rates like 48kHz, 96kHz, or beyond. This provides space for the low pass filter to remove extraneous frequencies without negatively affecting the audible frequency range.

This is another area that can become very complicated the deeper you dive into it – so I’ll point you toward another video that explains the concept extremely well.

The video is by Dan Worrall and it can be found on the FabFilter YouTube channel. If you’ve ever wondered how high you should set the sample rate, you’ll find that video very enlightening.

Harmonic Distortion & Non-Linearity

OK – aside from the noise, the signal fidelity of analog audio vs digital audio seems pretty close. But what is it about analog that makes it sound “warmer” or “better” to many people?

It can probably be boiled down to the distortion and noise that comes with using analog equipment.

As we already discussed, each time you pass a signal from one device to another, the inherent noise from each device will become a part of the signal. Not only that, but analog circuitry will also add harmonic distortion to the signal, which isn’t always a bad thing.

In terms of fidelity, this is another win for digital audio, because the signal can be processed with digital signal processing which doesn’t really suffer from noise and harmonic distortion that you get through analog processing.

So, if you’re going for transparency, digital audio is superior to analog.

But like I said before, the harmonic distortion associated with analog audio isn’t necessarily a bad thing. Sometimes you’re not going for a transparent sound and the way analog equipment colors the signal might be desirable.

Overdriving an analog system or saturating analog tape can sound awesome! Analog gear tends to sound more musical and organic when overdriven.

As you approach the limits of analog circuitry or tape, the quality of the signal will start to change. This nonlinear response to signals is something that can be modeled by digital systems, but is built into analog systems.

Digital systems will behave the same no matter where the signal level is in relation to the limitations until the signal actually exceeds those limitations. When the signal level in a digital system exceeds 0 dBFS, it immediately causes clipping, which sounds terrible 99% of the time.
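You can see the difference in behavior with a few lines of Python. Big caveat: I'm using `tanh` as a generic soft-saturation curve because it's a common textbook stand-in. It is not a model of any particular tape machine or analog circuit. Levels are normalized so that 1.0 represents the limit (0 dBFS on the digital side).

```python
import math

def hard_clip(x, limit=1.0):
    """Digital-style clipping: perfectly linear until the limit, then flat."""
    return max(-limit, min(limit, x))

def soft_saturate(x):
    """Generic soft saturation: gradually bends as the level rises."""
    return math.tanh(x)

for level in (0.1, 0.5, 1.0, 1.5, 2.0):
    print(f"in {level:.1f}  hard {hard_clip(level):.3f}  soft {soft_saturate(level):.3f}")
```

The hard clipper passes the signal untouched right up to the limit and then flattens it abruptly, while the soft curve starts rounding off the peaks well before the limit is reached.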

I’m going to classify this detail as a win for both digital and analog. On one hand, it’s nice to work within the linear and transparent digital environment for achieving the best possible fidelity. On the other hand, the tones you can achieve with skillful gain structure in the analog realm can also be great.

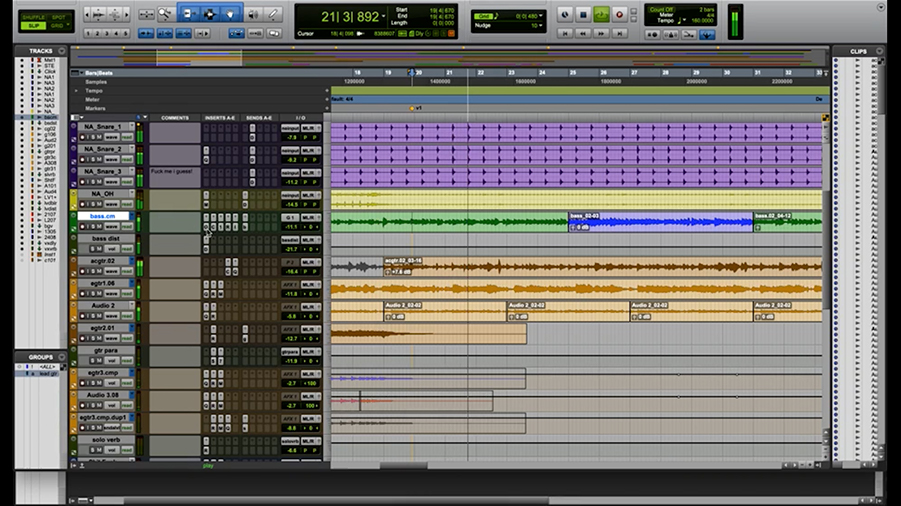

Production & Editing

While we’re on the subject of the differences in the production process between analog and digital audio, there are some things worth considering.

If you’ve been working in audio production for over 25 years, you already know where I’m going with this. If you’re relatively new to the industry, this might not be so obvious.

We already discussed the general process of analog and digital recording. In analog – the signals are stored to magnetic tape and played back using a tape machine. In digital – the signals are stored to a hard drive and played back using a computer.

Aside from the basic recording and playback process, there are a lot of differences between analog and digital music production.

One of the biggest differences is the editing process. Listen closely here, because the benefit of digital audio can’t be overstated when it comes to editing!

In a digital audio workstation (or a DAW), we can make cuts and adjustments to the length of a clip without any worry of making an irreversible mistake. In the non-destructive digital editing environment, we can always just press the “Undo” button and get back where we left off.

Analog edits have to be performed with a razor blade and adhesive tape to physically cut the tape and reassemble it. Unlike using a DAW, an analog tape editor has to rely solely on their ears to know where the cut should be made, and they have to create diagonal cuts to achieve a crossfade between the two clips.

Anyone who has worked with analog tape has probably found themselves in the unfortunate situation where the tape is unravelled from the reel, and the next hour is spent meticulously rewinding the tape.

If you mess up in analog editing, there might not be a way to salvage your work.

Not only that, but there are virtually no limitations to the number of tracks that can be stacked in a digital production, while most multitrack analog tape tops out at 24 tracks.

These advancements in technology have completely revolutionized not only music production, but music itself! Think about it – the ability to make precise edits to multitrack recordings and record as many takes as you want with no negative consequences. These benefits have opened the doors to much more elaborate and polished recordings using digital audio.

Portability & Durability

Once the production process is complete, we still need a way of distributing that production to millions of listeners. This is easily the biggest difference between analog and digital audio.

The best method for distributing analog audio is vinyl. Tape is bulky, in addition to being more difficult to reproduce and maintain. However, vinyl has its share of disadvantages too.

Firstly, vinyl is a physical medium which means each copy is costly to produce and needs to be physically shipped to listeners.

Secondly, vinyl changes over time. Not only is there a quality loss when the master tape is transferred to vinyl, but every time you listen to the vinyl record the quality degrades even further.

Today, digital audio can be streamed over the internet, and you can instantly distribute unlimited copies across the world with no quality loss.

Not only that, but an audio file can be listened to again and again without quality loss or damage.

Plus, digital files take up very little space compared to vinyl. When you compare the space it takes to store 1000 songs on vinyl versus 1000 songs on a hard drive, it’s no contest.

The Debate Continues

The truth is that both analog and digital audio systems have value in the modern world. The debate over which is better and which is worse will never end, because there is not a clear answer.

There are a million applications for audio technology, and each one calls for a unique set of equipment. Whether we are audio engineers, musicians, or listeners, we must each decide on a set of audio equipment that caters to the needs of each unique situation.

What Really Matters…

While I love talking about the subtle things, such as the differences between analog and digital, many of these details are relatively insignificant when it comes to sound quality.

If you really want the best sound quality possible, I’d recommend focusing on more important things like setting up your speaker placement properly.

This will make a huge difference and it’s completely free! In fact, I want to give you a free gift for making it this far in the post.

Follow this link to a free speaker placement guide that will help you to ensure your system is set up for optimal performance.